How to Drastically Reduce AWS S3 Costs with Intelligent-Tiering and Lifecycle Policies for Infrequently Accessed Data

Amazon S3 (Simple Storage Service) stands as a cornerstone for data storage. From hosting static websites and storing application backups to serving as a data lake for analytics, S3’s versatility is unparalleled. However, as data volumes grow exponentially, so too can the associated costs. Many organizations find themselves grappling with unexpectedly high S3 bills, often due to data residing in storage classes that don’t match its access patterns.

This comprehensive guide will demystify AWS S3 cost optimization, focusing specifically on two powerful features: S3 Intelligent-Tiering and S3 Lifecycle Policies. We’ll explore how to strategically leverage these tools to drastically reduce your AWS S3 costs, particularly for infrequently accessed data, without compromising availability or durability. By the end of this article, you’ll have a clear, actionable roadmap to implement these strategies and unlock significant savings.

The Hidden Cost of Unoptimized S3 Storage

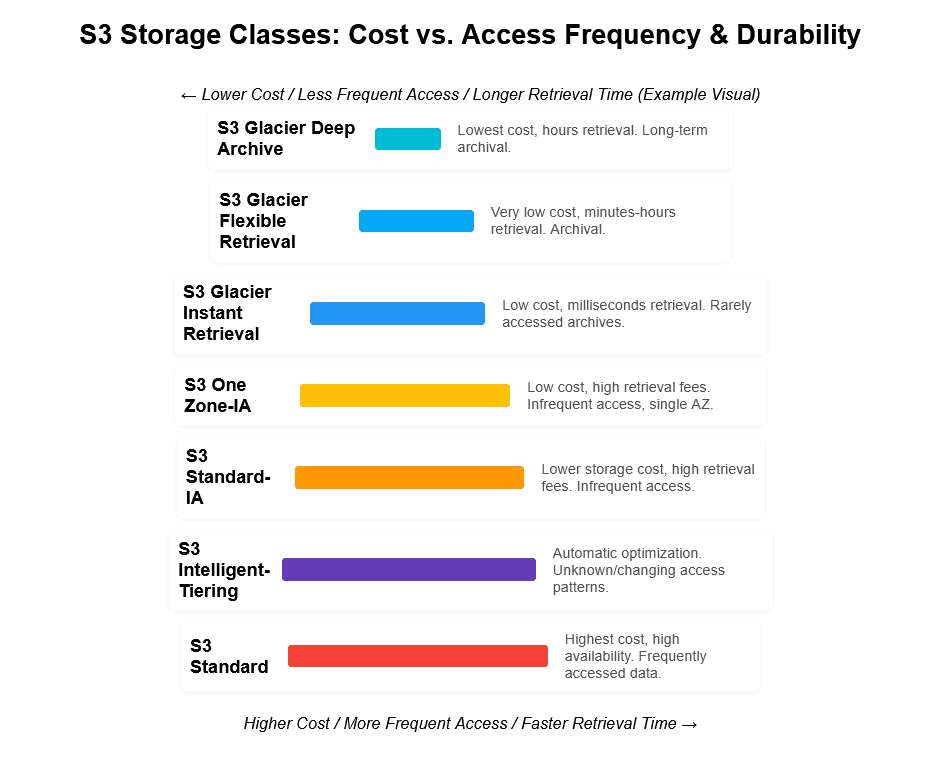

Before diving into solutions, it’s crucial to understand why S3 costs can escalate. AWS offers various S3 storage classes, each designed for different access patterns and performance requirements, with corresponding pricing models.

- S3 Standard: Default choice, high availability, frequently accessed data. Highest per-GB storage cost.

- S3 Standard-IA (Infrequent Access): Lower storage cost than Standard, but higher retrieval fees. Ideal for data accessed less frequently but requiring rapid access when needed.

- S3 One Zone-IA: Similar to Standard-IA, but data is stored in a single Availability Zone. It costs less than Standard-IA but offers lower availability, and data can be lost if that Availability Zone is destroyed. Not recommended for critical data that cannot be recreated.

- S3 Glacier Instant Retrieval: Low-cost archive storage with millisecond retrieval, for data that is rarely accessed but must be available immediately.

- S3 Glacier Flexible Retrieval (formerly S3 Glacier): Even lower cost, but retrieval can take minutes to hours. Suitable for archival data where occasional access is needed.

- S3 Glacier Deep Archive: The absolute lowest-cost storage, with retrieval times of hours. Designed for long-term data archival, like regulatory compliance data.
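To make the price gap between classes concrete, here is a minimal Python sketch that compares storage-only monthly cost for the same volume of data. The per-GB prices are illustrative assumptions rather than quoted AWS rates (real prices vary by region and change over time), and the class labels are simply the identifiers commonly used in the S3 API.

```python
# Illustrative per-GB-month storage prices in USD. These numbers are
# assumptions for the example only; check the S3 pricing page for real rates.
ILLUSTRATIVE_PRICE_PER_GB_MONTH = {
    "STANDARD": 0.023,
    "STANDARD_IA": 0.0125,
    "ONEZONE_IA": 0.01,
    "GLACIER_IR": 0.004,      # Glacier Instant Retrieval
    "GLACIER": 0.0036,        # Glacier Flexible Retrieval
    "DEEP_ARCHIVE": 0.00099,
}


def monthly_storage_cost(size_gb, storage_class,
                         prices=ILLUSTRATIVE_PRICE_PER_GB_MONTH):
    """Storage-only cost for one month; ignores request, retrieval,
    and data transfer fees."""
    return size_gb * prices[storage_class]


def cost_table(size_gb, prices=ILLUSTRATIVE_PRICE_PER_GB_MONTH):
    """Monthly storage cost per class, rounded to cents."""
    return {
        storage_class: round(monthly_storage_cost(size_gb, storage_class, prices), 2)
        for storage_class in prices
    }


if __name__ == "__main__":
    for storage_class, cost in cost_table(10_000).items():  # roughly 10 TB
        print(f"{storage_class:<13} ${cost:>8.2f} per month")
```

The sketch deliberately leaves out retrieval and request fees, which is exactly where the colder classes claw back some of their advantage.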

Introduction to S3 Intelligent-Tiering: The Smart Optimizer

S3 Intelligent-Tiering is a storage class that automatically optimizes storage costs by moving objects between access tiers when access patterns change. It’s like having an intelligent butler for your data, constantly monitoring its usage and moving each object to the most cost-effective tier with no manual intervention or performance impact. In exchange, AWS charges a small monthly monitoring and automation fee per object.

How S3 Intelligent-Tiering Works:

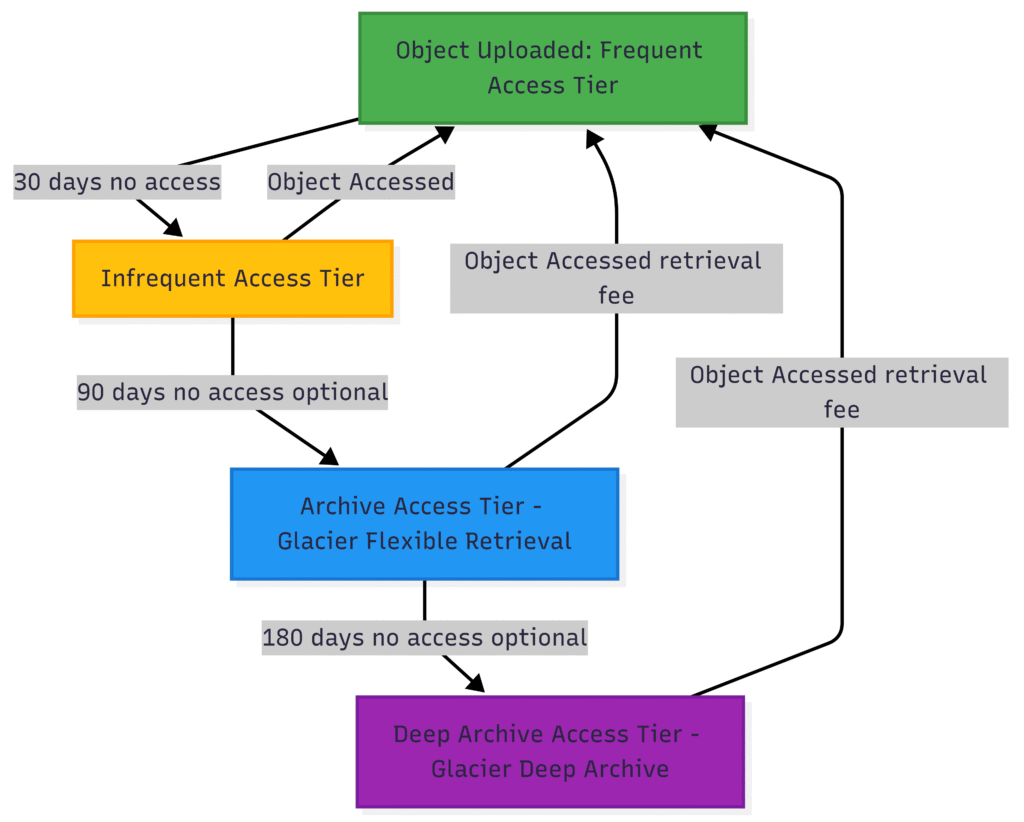

- Frequently Accessed Tier: When you upload an object to S3 Intelligent-Tiering, it starts in the Frequent Access tier, which is comparable in cost and performance to S3 Standard.

- Infrequently Accessed Tier: If an object hasn’t been accessed for 30 consecutive days, S3 Intelligent-Tiering automatically moves it to the Infrequent Access tier. This tier has a lower storage cost than the Frequent Access tier, similar to S3 Standard-IA. Objects that then go 90 consecutive days without access move on to the Archive Instant Access tier, which is priced similarly to S3 Glacier Instant Retrieval and still offers millisecond access.

- Future Access: If the object is accessed again later, it’s automatically moved back to the Frequent Access tier. There are no retrieval fees when moving between these tiers.

- Optional Archival Tiers: For even greater savings, you can configure S3 Intelligent-Tiering to include two archive access tiers:

- Archive Access Tier: If an object hasn’t been accessed for 90 consecutive days, it can be moved to this tier (similar to S3 Glacier Flexible Retrieval).

- Deep Archive Access Tier: If an object hasn’t been accessed for 180 consecutive days, it can be moved to this tier (similar to S3 Glacier Deep Archive). Objects in these two archive tiers must be restored before they can be read, which can take hours. Standard retrievals are free; faster expedited retrievals are billed.
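The tiering behavior described above can be sketched as a small decision function. This is a simplified model, not AWS's implementation: the tier labels are illustrative names chosen for this example, the optional archive thresholds are passed in as parameters, and the automatic Archive Instant Access step at 90 days follows AWS's documented behavior for this storage class.

```python
from typing import Optional


def intelligent_tiering_tier(days_since_last_access: int,
                             archive_after_days: Optional[int] = None,
                             deep_archive_after_days: Optional[int] = None) -> str:
    """Return the tier an object would sit in after a given idle period.

    archive_after_days / deep_archive_after_days model the two OPTIONAL
    archive tiers; leave them as None when those tiers are not enabled.
    """
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must be non-negative")

    # Coldest tier first, so the deepest matching threshold wins.
    if (deep_archive_after_days is not None
            and days_since_last_access >= deep_archive_after_days):
        return "DEEP_ARCHIVE_ACCESS"
    if (archive_after_days is not None
            and days_since_last_access >= archive_after_days):
        return "ARCHIVE_ACCESS"
    if days_since_last_access >= 90:
        return "ARCHIVE_INSTANT_ACCESS"   # automatic, millisecond access
    if days_since_last_access >= 30:
        return "INFREQUENT_ACCESS"
    return "FREQUENT_ACCESS"


if __name__ == "__main__":
    for idle_days in (5, 45, 120, 200):
        print(idle_days, intelligent_tiering_tier(idle_days, 90, 180))
```

Any access resets the idle counter, which in this model simply means calling the function again with a small `days_since_last_access`.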

When to Use S3 Intelligent-Tiering:

S3 Intelligent-Tiering is the ideal solution when:

- You have data with unknown or changing access patterns.

- You want to automate cost optimization without manual effort.

- You need immediate access to data, regardless of its tier (this holds as long as the optional archive tiers are not enabled).

- You want to avoid retrieval charges for changing access patterns within the Frequent and Infrequent Access tiers.

It’s particularly effective for data lakes, analytics platforms, user-generated content, or any application where data access predictability is low.

Deep Dive into S3 Lifecycle Policies: The Rule Enforcer

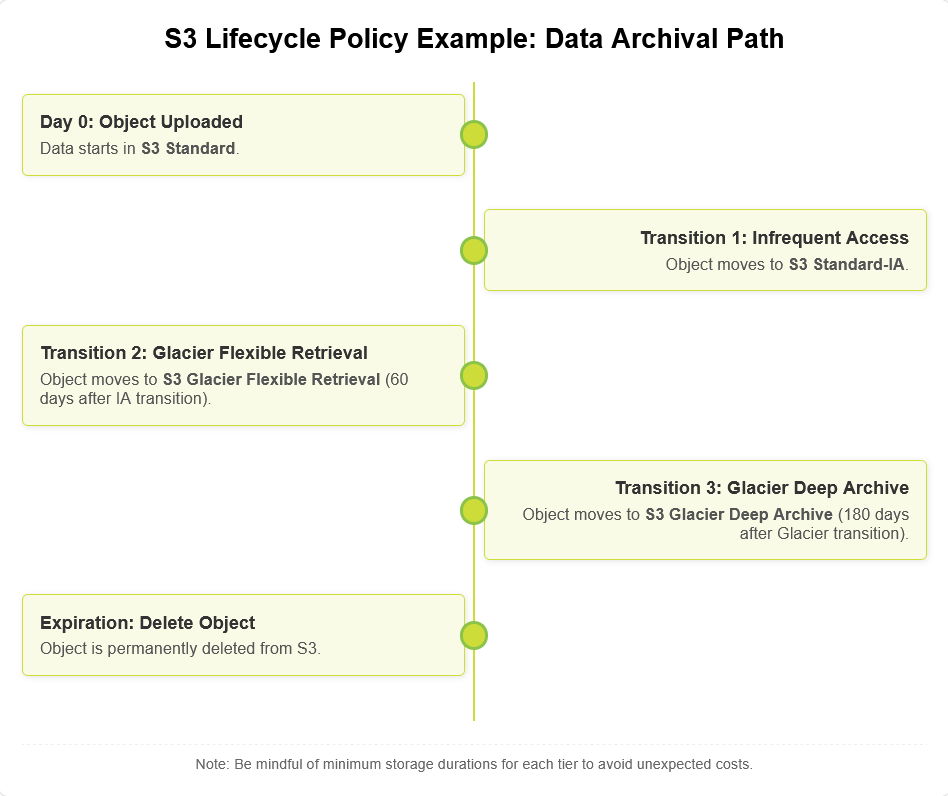

While S3 Intelligent-Tiering handles dynamic access patterns, S3 Lifecycle Policies are your go-to for data that follows predictable access patterns over its lifespan. Lifecycle policies define rules for objects to transition to different storage classes or to expire (delete) after a specified period. This is perfect for cost optimization for infrequently accessed data where you know its “cold” point in advance.

Two Types of Lifecycle Policy Actions:

- Transition Actions:

- Define when objects should be moved from one S3 storage class to another.

- Example: Move objects from S3 Standard to S3 Standard-IA after 30 days, and then to S3 Glacier Flexible Retrieval after 90 days.

- Crucial for long-term data archiving and reducing costs for data that becomes less frequently accessed over time.

- Expiration Actions:

- Define when objects should be permanently deleted.

- Example: Delete temporary log files after 7 days, or expired user sessions after 30 days.

- Important for data retention policies, compliance, and preventing unnecessary storage of outdated data.
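As a rough illustration of how transition and expiration actions look outside the console, the sketch below builds rule dictionaries in the shape the S3 lifecycle API expects (for example, as an entry in `Rules` for boto3's `put_bucket_lifecycle_configuration`). The helper name `build_lifecycle_rule`, the rule IDs, and the prefixes are hypothetical and chosen only for this example.

```python
import json


def build_lifecycle_rule(rule_id, prefix, transitions=(), expire_after_days=None):
    """Build one lifecycle rule dict.

    transitions: iterable of (days_after_creation, storage_class) pairs.
    expire_after_days: delete current versions after this many days, if set.
    """
    rule = {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
    }
    if transitions:
        rule["Transitions"] = [
            {"Days": days, "StorageClass": storage_class}
            for days, storage_class in transitions
        ]
    if expire_after_days is not None:
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


# Transition example from the text: Standard-IA at 30 days, then
# Glacier Flexible Retrieval (API name "GLACIER") at 90 days.
tiering_rule = build_lifecycle_rule(
    "tier-down-reports", "reports/",          # hypothetical ID and prefix
    transitions=[(30, "STANDARD_IA"), (90, "GLACIER")],
)

# Expiration example from the text: delete temporary logs after 7 days.
cleanup_rule = build_lifecycle_rule(
    "expire-temp-logs", "tmp-logs/",          # hypothetical ID and prefix
    expire_after_days=7,
)

if __name__ == "__main__":
    print(json.dumps({"Rules": [tiering_rule, cleanup_rule]}, indent=2))
```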

Common Use Cases for S3 Lifecycle Policies:

- Versioned Objects: Managing costs for previous versions of objects in a versioned S3 bucket. You can transition older versions to cheaper tiers or expire them.

- Incomplete Multipart Uploads: Cleaning up abandoned multipart uploads that incur storage costs.

- Log Files: Automatically moving old application or server logs to archive storage or deleting them after a certain period.

- Backups & Snapshots: Tiering older backups to S3 Glacier or Glacier Deep Archive for long-term retention.

- Temporary Data: Automatically deleting data that is only needed for a short period.

Key Considerations for Lifecycle Policies:

- Minimum Storage Durations: Most S3 storage classes other than S3 Standard have a minimum billing duration. For instance, moving an object to S3 Standard-IA or One Zone-IA will incur charges for a minimum of 30 days, even if you delete it sooner. For Glacier Instant Retrieval and Glacier Flexible Retrieval the minimum is 90 days, and for Glacier Deep Archive it is 180 days. Factor this into your transition rules to avoid unexpected charges.

- Transition Costs: There are small request charges when objects are transitioned between storage classes. These are usually negligible compared to the storage savings, but it’s good to be aware.

- Filtering: You can apply lifecycle rules to an entire bucket, or filter them based on object prefixes (folders) or object tags. This allows for granular control over different datasets within the same bucket.

- Non-Current Versions: When dealing with versioning enabled buckets, remember to apply separate rules for current versions and non-current versions if you want to manage their lifecycles differently.
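The minimum-duration consideration is easy to check mechanically before a rule goes live. The following sketch assumes AWS's published minimums (30 days for the IA classes, 90 for Glacier Instant and Flexible Retrieval, 180 for Deep Archive); the function names are hypothetical.

```python
# Minimum billable storage duration in days per class (AWS-published values
# at the time of writing; verify against current S3 pricing documentation).
MIN_STORAGE_DAYS = {
    "STANDARD": 0,
    "STANDARD_IA": 30,
    "ONEZONE_IA": 30,
    "GLACIER_IR": 90,
    "GLACIER": 90,         # Glacier Flexible Retrieval
    "DEEP_ARCHIVE": 180,
}


def billable_days(storage_class, days_stored):
    """Days you pay for if an object leaves the class after days_stored."""
    return max(days_stored, MIN_STORAGE_DAYS[storage_class])


def transition_warnings(transitions):
    """Flag transitions that leave a class before its minimum duration.

    transitions: list of (days_after_creation, storage_class) pairs.
    """
    warnings = []
    ordered = sorted(transitions)
    # Compare each transition with the next one to get the time in class.
    for (day, cls), (next_day, next_cls) in zip(ordered, ordered[1:]):
        stay = next_day - day
        if stay < MIN_STORAGE_DAYS[cls]:
            warnings.append(
                f"{cls}: {stay} days before moving to {next_cls}, "
                f"but {MIN_STORAGE_DAYS[cls]} days are billed"
            )
    return warnings


if __name__ == "__main__":
    print(transition_warnings([(30, "STANDARD_IA"), (90, "GLACIER")]))  # no warnings
    print(transition_warnings([(30, "STANDARD_IA"), (45, "GLACIER")]))  # one warning
```

The same check applies to expiration: an object expired 20 days after landing in Deep Archive is still billed for the full minimum.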

Practical Implementation: Step-by-Step Guide

Let’s walk through how to configure these powerful cost-saving features in the AWS Management Console.

1. Implementing S3 Intelligent-Tiering:

You can enable Intelligent-Tiering when uploading objects or by changing the storage class of existing objects.

Step-by-Step for New Uploads:

- Navigate to S3: Open the AWS Management Console, search for “S3,” and select the service.

- Choose or Create Bucket: Select the S3 bucket where you want to store your data, or create a new one.

- Upload Objects: Click “Upload” and add your files.

- Set Properties: In the “Properties” section of the upload wizard, under “Storage Class,” select “Intelligent-Tiering.”

- Review and Upload: Review your settings and click “Upload.”

Step-by-Step for Existing Objects:

- Navigate to S3 Bucket: Go to your S3 bucket.

- Select Objects: Select the objects or folders whose storage class you want to change.

- Actions Menu: Click on the “Actions” dropdown menu.

- Edit Storage Class: Select “Edit storage class.”

- Choose Intelligent-Tiering: In the dialog box, choose “Intelligent-Tiering” and click “Save changes.”
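The same two console workflows can be sketched with boto3. The helpers below are hypothetical wrappers that accept any client exposing `put_object` and `copy_object` (such as `boto3.client("s3")`), so they can be tried against a stub before touching a real bucket; bucket and key names are placeholders.

```python
def upload_to_intelligent_tiering(s3_client, bucket, key, body):
    """Upload a new object directly into the Intelligent-Tiering class."""
    return s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        StorageClass="INTELLIGENT_TIERING",
    )


def move_existing_to_intelligent_tiering(s3_client, bucket, key):
    """Change an existing object's class by copying it onto itself.

    Assumption: the object is small enough for a single copy_object call
    (larger objects need a multipart copy instead).
    """
    return s3_client.copy_object(
        Bucket=bucket,
        Key=key,
        CopySource={"Bucket": bucket, "Key": key},
        StorageClass="INTELLIGENT_TIERING",
    )


# Example usage with a real client (requires boto3 and AWS credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   upload_to_intelligent_tiering(s3, "my-example-bucket", "data/file.csv", b"...")
#   move_existing_to_intelligent_tiering(s3, "my-example-bucket", "data/old.csv")
```

For large numbers of existing objects, a lifecycle transition to Intelligent-Tiering is usually less work than copying objects one by one.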

Configuring Archive Access Tiers (Optional but Recommended for Max Savings):

For maximum savings, especially for data that might become very cold, you should enable the optional archive access tiers within Intelligent-Tiering.

- Navigate to S3 Bucket: Go to your S3 bucket.

- Properties Tab: Click on the “Properties” tab.

- Intelligent-Tiering Configuration: Scroll to the section named “Intelligent-Tiering Archive configurations.”

- Create Configuration: Click “Create configuration.”

- Configure Tiers:

- Give your configuration a name.

- You can apply it to the entire bucket or filter by prefix or tags.

- Check “Enable Archive Access tier” and specify the number of days without access (e.g., 90 days) after which objects move to the Archive Access tier.

- Check “Enable Deep Archive Access tier” and specify the number of days without access (e.g., 180 days) after which objects move to the Deep Archive Access tier.

- Create: Click “Create configuration.”
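For teams that prefer code over console clicks, the archive configuration above maps onto the S3 `PutBucketIntelligentTieringConfiguration` API. This is a minimal sketch: the helper names and the configuration ID are hypothetical, the client is anything exposing `put_bucket_intelligent_tiering_configuration` (such as a boto3 S3 client), and the validation only enforces the 90-day and 180-day minimums mentioned in this guide.

```python
def build_archive_config(config_id, archive_days=90, deep_archive_days=180,
                         prefix=None):
    """Build an Intelligent-Tiering archive configuration dict."""
    if archive_days < 90:
        raise ValueError("Archive Access tier needs at least 90 days")
    if deep_archive_days < 180:
        raise ValueError("Deep Archive Access tier needs at least 180 days")

    config = {
        "Id": config_id,
        "Status": "Enabled",
        "Tierings": [
            {"Days": archive_days, "AccessTier": "ARCHIVE_ACCESS"},
            {"Days": deep_archive_days, "AccessTier": "DEEP_ARCHIVE_ACCESS"},
        ],
    }
    if prefix:
        # Optional: limit the configuration to keys under one prefix.
        config["Filter"] = {"Prefix": prefix}
    return config


def apply_archive_config(s3_client, bucket, config):
    """Send the configuration to S3 via the supplied client."""
    return s3_client.put_bucket_intelligent_tiering_configuration(
        Bucket=bucket,
        Id=config["Id"],
        IntelligentTieringConfiguration=config,
    )


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   config = build_archive_config("archive-cold-data", prefix="raw/")
#   apply_archive_config(boto3.client("s3"), "my-example-bucket", config)
```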

Once configured, S3 Intelligent-Tiering will automatically manage your data movement between the specified tiers, requiring no further intervention from your side. This automatic cost optimization is a significant advantage.

2. Implementing S3 Lifecycle Policies:

Lifecycle policies are defined at the bucket level and can apply to all objects or specific subsets.

Step-by-Step Guide:

- Navigate to S3 Bucket: Open your S3 bucket.

- Management Tab: Click on the “Management” tab.

- Lifecycle Rules: Under “Lifecycle rules,” click “Create lifecycle rule.”

- Rule Configuration:

- Lifecycle rule name: Give your rule a descriptive name (e.g., “ArchiveLogsAfter30Days”).

- Choose a rule scope:

- Apply to all objects in the bucket: Simplest, but applies universally.

- Limit the scope of this rule using one or more filters: Recommended for granular control. You can filter by Prefix (e.g., logs/, backups/) or by Tags (e.g., environment:dev, data_type:archive).

- Lifecycle rule actions:

- Transition current versions of objects between storage classes: Select this to move data.

- Transition noncurrent versions of objects between storage classes: Essential for versioned buckets.

- Expire current versions of objects: Delete current objects.

- Expire noncurrent versions of objects: Delete old versions.

- Delete expired object delete markers or incomplete multipart uploads: Cleanup.

- Configure Transition Actions (Example: Standard to Standard-IA):

- If you selected “Transition current versions of objects,” click “Add transition.”

- Choose storage class: Select “Standard-IA.”

- Days after creation: Specify the number of days after object creation (e.g., 30).

- You can add multiple transitions (e.g., Standard-IA to Glacier Flexible Retrieval after 90 days total).

- Configure Expiration Actions (Example: Delete after 365 days):

- If you selected “Expire current versions of objects,” specify the number of days after object creation (e.g., 365).

- Review and Create Rule: Review all your settings carefully. Ensure the minimum storage durations are accounted for if transitioning. Click “Create rule.”

Example Lifecycle Rule Scenarios:

- Log Archiving:

- Scope: Prefix logs/

- Action 1 (Transition): Current version to S3 Standard-IA after 30 days.

- Action 2 (Transition): Current version to S3 Glacier Flexible Retrieval after 90 days.

- Action 3 (Expiration): Current version expires after 365 days.

- Backup Retention:

- Scope: Prefix backups/

- Action 1 (Transition): Current version to S3 Glacier Deep Archive after 180 days.

- Action 2 (Expiration): Current version expires after 5 years (1825 days).

- Temporary Data Cleanup:

- Scope: Prefix temp/

- Action (Expiration): Current version expires after 7 days.
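The three scenarios above (log archiving, backup retention, temporary data cleanup) could be expressed as one lifecycle configuration along these lines. The rule IDs and the `apply_lifecycle` helper are hypothetical; the dictionary layout follows the S3 lifecycle API as exposed by boto3's `put_bucket_lifecycle_configuration`. Note that this call replaces any lifecycle configuration already on the bucket, so existing rules need to be included in the same request.

```python
LIFECYCLE_CONFIGURATION = {
    "Rules": [
        {
            "ID": "log-archiving",
            "Filter": {"Prefix": "logs/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},  # Flexible Retrieval
            ],
            "Expiration": {"Days": 365},
        },
        {
            "ID": "backup-retention",
            "Filter": {"Prefix": "backups/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},
            ],
            "Expiration": {"Days": 1825},  # 5 years
        },
        {
            "ID": "temp-data-cleanup",
            "Filter": {"Prefix": "temp/"},
            "Status": "Enabled",
            "Expiration": {"Days": 7},
        },
    ]
}


def apply_lifecycle(s3_client, bucket, configuration=LIFECYCLE_CONFIGURATION):
    """Replace the bucket's lifecycle configuration with the given rules."""
    return s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=configuration,
    )


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   apply_lifecycle(boto3.client("s3"), "my-example-bucket")
```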

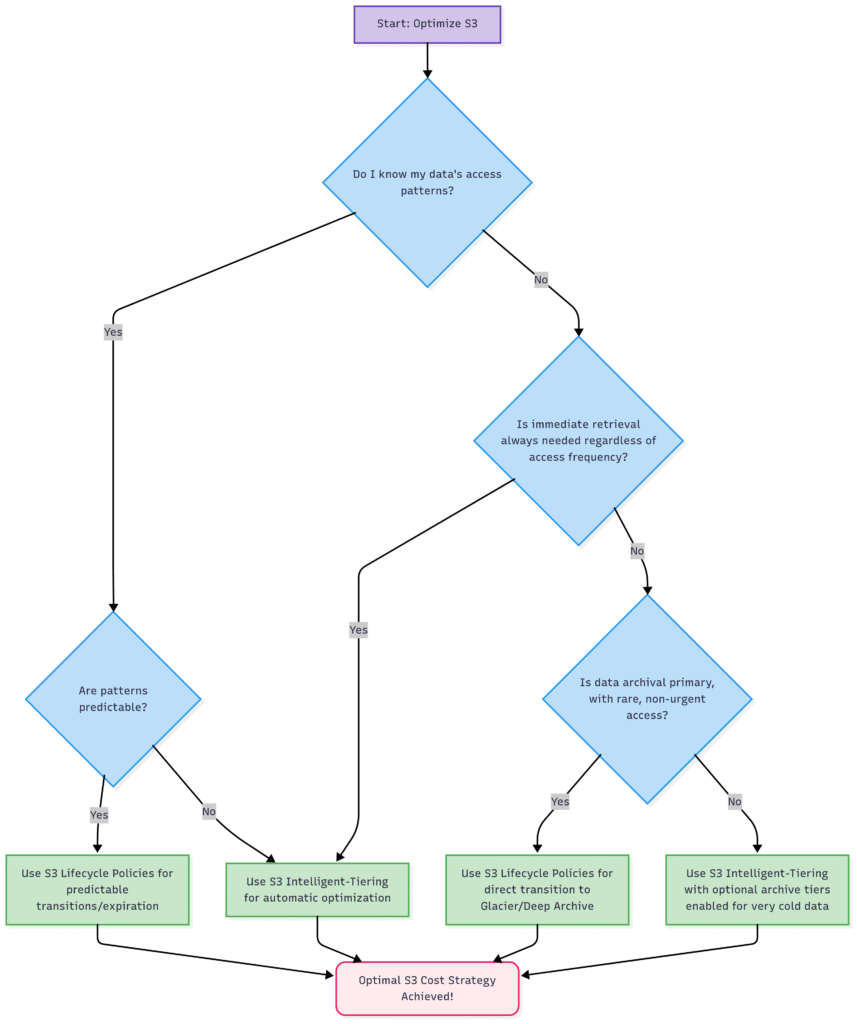

Comparing S3 Intelligent-Tiering and S3 Lifecycle Policies: When to Use Which?

While both aim for S3 cost reduction, they serve different purposes:

| Feature/Criteria | S3 Intelligent-Tiering | S3 Lifecycle Policies |
| --- | --- | --- |
| Access Pattern | Unknown or changing (data goes hot/cold unpredictably) | Predictable (data becomes less frequent over time or needs to be deleted) |
| Automation | Fully automatic based on access patterns | Manual rule definition; then automatic execution of rules |
| Operational Overhead | Minimal once configured; a small per-object monitoring and automation charge applies | Requires initial setup and ongoing review of rules |
| Retrieval Fees | None for tiering between Frequent/Infrequent access tiers | Per-GB retrieval fees apply in the IA and Glacier classes |
| Minimum Billing Duration | None | Depends on the target class (30 days for Standard-IA and One Zone-IA, 90 for Glacier Instant and Flexible Retrieval, 180 for Deep Archive) |
| Best For | Data lakes, user-generated content, analytics, dynamic archives | Logs, backups, regulatory archives, temporary files, versioned data |
| Granularity | Applied to objects/prefixes/tags; monitors individual object access | Applied to objects/prefixes/tags; rule-based on age |

Important Notes:

- Use S3 Intelligent-Tiering when you don’t know your data access patterns upfront or they are highly variable. It’s the “set it and forget it” option for dynamic cost optimization.

- Use S3 Lifecycle Policies when you have a clear understanding of your data’s lifecycle and access patterns change predictably over time, or when you need to enforce retention/deletion policies.

In many cases, a hybrid approach combining both can yield the greatest savings. For example, you might use Intelligent-Tiering for new, active data, and then after a certain period, use a Lifecycle Policy to transition it to a deep archive tier if you know it will rarely (if ever) be accessed again.

Advanced Tips and Best Practices for S3 Cost Savings

Analyze Your Current S3 Usage:

Before making changes, use AWS Cost Explorer and S3 Storage Class Analysis to understand your current data distribution across storage classes and identify hot/cold data. This is crucial for informed decision-making. S3 Storage Class Analysis observes access patterns over time and can help you decide when specific datasets should move from S3 Standard to Standard-IA.

Enable S3 Versioning (with caution):

While versioning provides excellent data protection and recovery, it stores every version of an object, which can quickly inflate costs. Always pair versioning with a Lifecycle Policy to manage non-current versions, transitioning them to cheaper storage or expiring them after a set period.
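A lifecycle rule for non-current versions might look like the following sketch. The helper name, rule ID, and the 30/365-day values are illustrative assumptions; the `NoncurrentVersionTransitions` and `NoncurrentVersionExpiration` keys are the fields the S3 lifecycle API uses for older versions.

```python
def noncurrent_version_rule(rule_id, transition_after_days, expire_after_days,
                            storage_class="STANDARD_IA", prefix=""):
    """Tier down, then delete, object versions that are no longer current.

    Day counts are measured from the moment a version becomes non-current.
    An empty prefix applies the rule to the whole bucket.
    """
    if expire_after_days <= transition_after_days:
        raise ValueError("expiration must come after the transition")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "NoncurrentVersionTransitions": [
            {"NoncurrentDays": transition_after_days,
             "StorageClass": storage_class},
        ],
        "NoncurrentVersionExpiration": {"NoncurrentDays": expire_after_days},
    }


# Example: old versions go to Standard-IA after 30 days, deleted after a year.
old_versions_rule = noncurrent_version_rule("manage-old-versions", 30, 365)
```

The resulting dict can be added to the `Rules` list of a bucket's lifecycle configuration alongside rules for current versions.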

Monitor Your Bills:

Regularly check your AWS Cost and Usage Reports (CUR) and S3 billing details to verify that your optimization strategies are yielding the expected savings. Look for line items related to storage, requests, and data transfers.

Use S3 Storage Lens:

S3 Storage Lens provides organization-wide visibility into S3 usage and activity trends, offering actionable recommendations for cost efficiency and data protection.

Clean Up Incomplete Multipart Uploads:

Partially uploaded files can consume storage. Use a lifecycle rule to abort incomplete multipart uploads after a few days (e.g., 7 days) to avoid unnecessary charges.
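As a small sketch, the cleanup rule described here corresponds to the `AbortIncompleteMultipartUpload` action in the S3 lifecycle API. The helper name and generated rule ID are hypothetical.

```python
def abort_incomplete_uploads_rule(days_after_initiation=7):
    """Lifecycle rule that aborts multipart uploads left unfinished."""
    if days_after_initiation < 1:
        raise ValueError("days_after_initiation must be at least 1")
    return {
        "ID": f"abort-incomplete-multipart-after-{days_after_initiation}-days",
        "Filter": {"Prefix": ""},   # empty prefix: applies to the whole bucket
        "Status": "Enabled",
        "AbortIncompleteMultipartUpload": {
            "DaysAfterInitiation": days_after_initiation,
        },
    }


cleanup_rule = abort_incomplete_uploads_rule(7)
```

Like any other rule, this dict goes into the `Rules` list of the bucket's lifecycle configuration.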

Review Old Data:

Periodically review your S3 buckets for old, unused, or orphaned data that can be deleted or moved to the absolute cheapest storage tiers.

Consider Data Duplication:

If you have duplicate data across multiple buckets or regions, consolidate where possible, or ensure each copy is optimized for its specific use case.

Understand Request and Data Transfer Costs:

While this article focuses on storage costs, remember that request costs (GET, PUT, LIST operations) and data transfer costs (especially egress) can also contribute significantly to your S3 bill. Optimization efforts should consider these as well. Intelligent-Tiering avoids retrieval fees between its automatic tiers, but data transfer out of AWS is billed separately regardless of storage class.
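Request charges matter most when a bucket holds many small objects, because lifecycle transitions are billed per request while savings accrue per GB. The sketch below estimates how many months of storage savings are needed to pay back the transition requests. All prices are illustrative assumptions, not quoted AWS rates, and the model ignores minimum billable object sizes and retrieval fees.

```python
def months_to_break_even(object_count, avg_object_size_gb,
                         price_from_per_gb, price_to_per_gb,
                         transition_price_per_1000):
    """Months of storage savings needed to recoup transition request fees.

    Returns None when the target class is not cheaper per GB.
    """
    transition_cost = object_count / 1000 * transition_price_per_1000
    monthly_saving = (object_count * avg_object_size_gb
                      * (price_from_per_gb - price_to_per_gb))
    if monthly_saving <= 0:
        return None
    return transition_cost / monthly_saving


if __name__ == "__main__":
    # One million objects, illustrative prices: $0.023 -> $0.0125 per GB-month,
    # $0.01 per 1,000 transition requests.
    for label, size_gb in (("1 MB objects", 0.001), ("10 KB objects", 0.00001)):
        months = months_to_break_even(1_000_000, size_gb, 0.023, 0.0125, 0.01)
        print(f"{label}: about {months:.1f} months to break even")
```

Under these assumed prices, the megabyte-sized objects pay back in about a month, while the tiny objects take years, which is a hint to aggregate small files before archiving them.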

Conclusion: A Smarter Way to Manage Your Data and Dollars

AWS S3 cost optimization is not a one-time task but an ongoing process. By strategically implementing S3 Intelligent-Tiering and S3 Lifecycle Policies, you gain powerful tools to automatically or predictably reduce your storage expenses, especially for infrequently accessed data.

Intelligent-Tiering provides a hands-off, adaptive solution for dynamic data, ensuring you’re always paying the optimal price. Lifecycle Policies offer precise control for data with predictable lifespans, enabling efficient archiving and timely deletion.

Embracing these strategies will not only reduce your AWS bill but also streamline your data management, enhance your cloud governance, and free up resources for innovation. Start by analyzing your current S3 usage, identify your data access patterns, and then apply the appropriate combination of Intelligent-Tiering and Lifecycle Policies. Your wallet (and your cloud budget) will thank you!
