Troubleshooting Common AWS Lambda Cold Start Issues: Strategies for Performance Optimization

AWS Lambda has revolutionized application development by enabling serverless architectures, allowing developers to run code without provisioning or managing servers. This “pay-as-you-go” model and inherent scalability make it incredibly attractive. However, one of the most frequently encountered performance challenges in Lambda-based applications is the “cold start”.

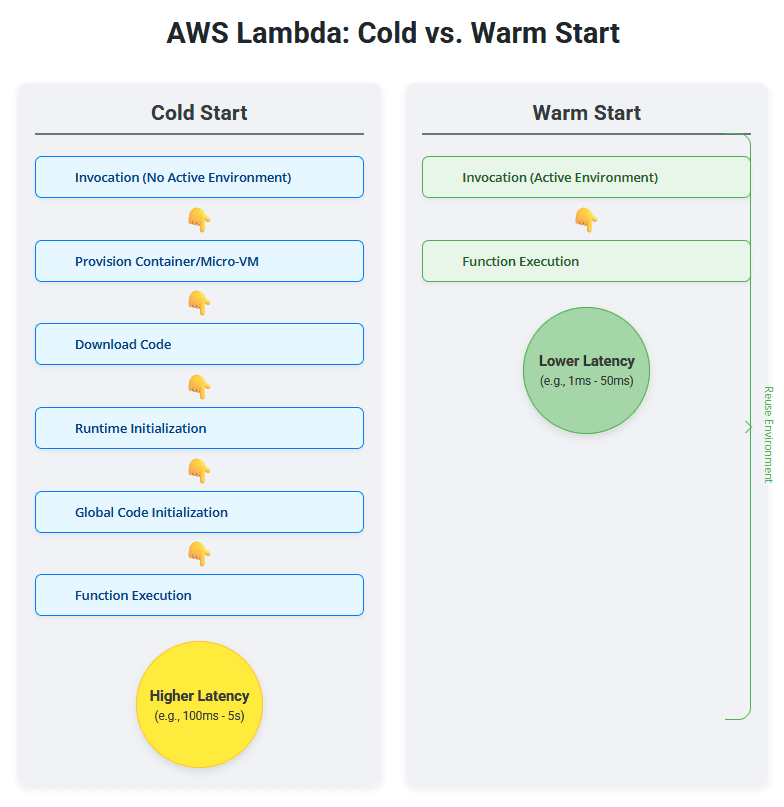

A cold start occurs when Lambda needs to create and initialize a new execution environment for your function. This involves downloading your code, starting the language runtime, and running your function’s initialization code before the handler can execute. In contrast, a “warm start” reuses an existing execution environment. Cold starts are an inherent part of Lambda’s operational model, but the latency they add can significantly affect the user experience, especially for interactive or low-latency applications.

This article will delve into common AWS Lambda cold start issues, explain their root causes, and provide practical strategies for performance optimization to reduce Lambda invocation latency. Our goal is to equip you with the knowledge and techniques to effectively manage and minimize the impact of cold starts on your serverless applications.

Understanding the AWS Lambda Cold Start Phenomenon

To effectively troubleshoot cold starts, it’s essential to understand what happens behind the scenes. When a Lambda function is invoked:

1. Warm Start (Ideal Scenario):

- If there’s an existing execution environment that was used recently and is currently idle (not handling another request), Lambda reuses it.

- The code is already loaded, and the runtime is initialized.

- This leads to very low invocation latency (typically in milliseconds).

2. Cold Start (The Challenge):

- If there’s no active execution environment available (e.g., first invocation after a period of inactivity, scaling up to handle increased load, or deploying a new version), Lambda performs a cold start.

- The steps involved are:

- Provisioning a new execution environment: AWS finds capacity on a worker host and creates a new, isolated execution environment (Lambda uses lightweight Firecracker micro-VMs for this isolation).

- Downloading function code: Your function’s deployment package (code, dependencies, and any layers) is copied into the new environment.

- Runtime initialization: The language runtime (Node.js, Python, Java, etc.) and any Lambda extensions are started.

- Function code initialization: Your function’s global code (anything outside the main handler function) is executed. This includes importing modules, establishing database connections, or initializing SDK clients.

- Function invocation: Finally, your handler function is executed.

The time taken for these initial setup steps (from provisioning to code initialization) contributes to the cold start latency. This can range from a few tens of milliseconds for lightweight runtimes (Node.js, Python) to several seconds for heavier runtimes (Java, .NET Core) with large dependency trees.
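You can observe the split between initialization and invocation in your own code. Module-level code runs once per execution environment, during initialization, while the handler runs on every invocation. The following is a minimal Python sketch, assuming Lambda’s standard `handler(event, context)` signature. It uses a module-level flag to report whether an invocation was the first one in its environment. `SETTINGS` is a hypothetical stand-in for configuration you would load at init time.

```python
import time

# Module-level (global) code runs once per execution environment, during init.
_init_started = time.perf_counter()
SETTINGS = {"greeting": "hello"}  # hypothetical config loaded once at init
INIT_MS = (time.perf_counter() - _init_started) * 1000.0

# Stays True until the first invocation in this environment has been handled.
_is_cold_start = True


def handler(event, context):
    """Runs on every invocation; reports whether this one was a cold start."""
    global _is_cold_start
    was_cold = _is_cold_start
    _is_cold_start = False  # later invocations in this environment are warm
    return {
        "cold_start": was_cold,
        # Only this module's own init time, not the whole Init phase.
        "module_init_ms": INIT_MS if was_cold else 0.0,
        "message": SETTINGS["greeting"],
    }
```

If you log the `cold_start` value from a handler like this, you can correlate slow responses with newly created environments.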

Common Causes of Lambda Cold Starts

Understanding the primary triggers for cold starts is the first step in mitigation:

1. Inactivity (First Invocation):

If a function hasn’t been invoked for a while, Lambda eventually decommissions its idle execution environments, which leads to a cold start on the next invocation. AWS does not publish the idle timeout. It is often observed to be around 10-15 minutes, but this isn’t guaranteed.

2. Scaling Up:

When your function experiences a surge in traffic, Lambda needs to provision new execution environments to handle the increased concurrency. Each new environment will incur a cold start.

3. Code Updates/Deployment:

Every time you deploy a new version of your Lambda function, new execution environments are spun up to serve the new code, resulting in cold starts.

4. Runtime Choice:

Some runtimes inherently have longer initialization times due to their nature (e.g., Java and .NET Core typically have larger runtimes and require more time for JVM/CLR startup compared to Node.js or Python).

5. Large Deployment Packages:

A larger .zip or container image size means more time for Lambda to download your function code to the execution environment. This includes your code, dependencies, and any layers.

6. Extensive Global Initialization Logic:

Any code outside your main handler function runs during the cold start. If this code performs heavy operations like complex calculations, network calls (e.g., to databases or external APIs), or loading large configuration files, it will significantly increase cold start time.

7. Memory Allocation:

While not a direct cause, a very low memory setting makes cold starts slower. Lambda allocates CPU in proportion to memory, so a small allocation leaves less CPU for runtime startup and for your initialization code.
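To act on cause 5, it helps to see what actually takes up space in a package before you zip it. The following is a minimal, standard-library-only Python sketch. It assumes your build output sits in a local directory (any path you pass in, such as a hypothetical `build/`) and totals file sizes per top-level entry, so the heaviest dependencies stand out.

```python
import os
from pathlib import Path


def size_by_top_level(root: str) -> list[tuple[str, int]]:
    """Return (top-level entry, total bytes) pairs under root, largest first."""
    root_path = Path(root)
    totals: dict[str, int] = {}
    for dirpath, _dirnames, filenames in os.walk(root_path):
        for name in filenames:
            file_path = Path(dirpath) / name
            # First path component relative to root names the package or file.
            top = file_path.relative_to(root_path).parts[0]
            totals[top] = totals.get(top, 0) + file_path.stat().st_size
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def report(root: str, limit: int = 10) -> None:
    """Print the largest top-level entries in MiB."""
    for entry, size in size_by_top_level(root)[:limit]:
        print(f"{size / 1_048_576:8.2f} MiB  {entry}")
```

Run `report("build/")` after installing dependencies into your build directory. Any unexpectedly large entry is a candidate for removal, tree shaking, or a lighter alternative.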


Strategies for Performance Optimization: AWS Lambda Cold Start Fix and Mitigation

While eliminating cold starts entirely is impossible (it’s how Lambda works!), you can significantly reduce Lambda invocation latency and minimize their impact. Here are the key strategies:

1. Optimize Your Code and Deployment Package

This is the most fundamental and often most effective strategy.

- Minimize Deployment Package Size:

- Remove Unused Dependencies: Audit your dependency list and remove libraries or modules that your function never actually imports.

- Tree Shaking: For Node.js and JavaScript, use bundlers like Webpack or Rollup to perform tree shaking, which removes dead code from your dependencies.

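- Exclude Dev Dependencies: Ensure `devDependencies` are not included in your deployment package.

- Use Lambda Layers Wisely: For common libraries shared across functions, create Lambda Layers. Layers keep your function’s own package small and make shared code easier to manage. However, their contents still count toward Lambda’s total unzipped size limit and are still loaded into each new execution environment, so moving code into a layer does not by itself shorten cold starts.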

- Optimize Global Initialization Logic:

- Move Initialization Outside the Handler: Any code that only needs to run once per execution environment (e.g., database connections, SDK client initialization, loading configuration) should be placed outside your main handler function. This ensures it’s part of the cold start initialization but not repeated on every warm invocation.

- Lazy Initialization (if applicable): If certain resources are only needed on specific execution paths, create them the first time one of those paths actually runs, then keep them for reuse on later invocations.

- Avoid Complex Operations: Minimize synchronous network calls or heavy computations in the global scope.

- Choose the Right Runtime:

- Node.js and Python generally have faster cold start times due to their lightweight runtimes.

- Java and .NET Core typically have longer cold start times. If you use these, be extra diligent with other optimization strategies. Prefer newer runtime versions as AWS often optimizes them.
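Here is a Python sketch of the global-versus-lazy initialization advice above. `ExpensiveClient` is a hypothetical stand-in for something like a database connection or SDK client, and `REPORTS_ENDPOINT` is a hypothetical environment variable. Cheap setup that every path needs runs at module level, once per environment. The expensive client is built on first use and then cached for later warm invocations.

```python
import functools
import os


class ExpensiveClient:
    """Hypothetical stand-in for a slow-to-create client (DB, SDK, etc.)."""

    instances = 0  # counts constructions so the caching is observable

    def __init__(self, endpoint: str) -> None:
        ExpensiveClient.instances += 1
        self.endpoint = endpoint

    def fetch(self, key: str) -> str:
        return f"{key}@{self.endpoint}"


# Cheap setup needed on every path: done once, at module (init) time.
ENDPOINT = os.environ.get("REPORTS_ENDPOINT", "https://reports.example.internal")


@functools.lru_cache(maxsize=None)
def get_reports_client() -> ExpensiveClient:
    """Create the client on the first call only; later calls reuse it."""
    return ExpensiveClient(ENDPOINT)


def handler(event, context):
    if event.get("action") == "report":
        # Only this path pays for creating the client, once per environment.
        return {"result": get_reports_client().fetch(event["key"])}
    return {"result": "ok"}  # fast path never touches the expensive client
```

With this layout, invocations that never need the report client don't pay its setup cost. Moving the client to module level would be the better choice if nearly every invocation uses it.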

2. Configure Lambda Properly

Proper Lambda configuration can make a significant difference.

- Increase Memory Allocation:

- Lambda allocates CPU power in proportion to the memory you configure. Increasing memory therefore gives your function more CPU, which can speed up the compute-bound parts of a cold start, such as runtime startup and your initialization code.

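- Even if your function doesn’t need the extra memory for its core logic, raising the memory setting can improve performance and can even lower overall cost. Lambda bills for memory multiplied by duration, so if the extra CPU shortens execution enough, the shorter duration offsets the higher per-millisecond price. Experiment with memory settings to find the sweet spot; the open-source AWS Lambda Power Tuning tool can automate these measurements.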

- Use Provisioned Concurrency (for predictable workloads):

- Provisioned Concurrency keeps a pre-warmed set of execution environments ready to respond to invocations with very low latency.

- This is the most direct cold start fix for critical, latency-sensitive functions with predictable traffic.

- Considerations: You pay for provisioned concurrency even when the function isn’t invoked, so it’s best for applications where consistent, low-latency performance is crucial and traffic patterns are somewhat predictable (e.g., APIs, interactive frontends).

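- SnapStart for Java (and some newer runtimes):

- For Java functions running on Java 11 or later (Amazon Corretto), Lambda SnapStart can significantly reduce cold start times. When you publish a function version, Lambda runs your initialization code, takes a snapshot of the initialized execution environment (memory and disk state), and caches it. New execution environments for that version are then restored from the snapshot instead of being initialized from scratch, which bypasses much of the typical JVM startup and initialization. AWS has since extended SnapStart to some newer Python and .NET runtimes; check the current documentation for supported versions.

- Considerations: SnapStart works on published versions (and aliases pointing to them), not on `$LATEST`. Make sure your code is snapshot-safe: anything that must be unique per environment should be created or refreshed after restore rather than captured in the snapshot. Examples include random seeds, unique IDs, temporary credentials, and network connections. Always test thoroughly.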
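The memory-versus-cost trade-off described above comes down to simple arithmetic. Lambda bills compute as allocated memory (in GB) multiplied by billed duration (in seconds) and a per-GB-second price, plus a per-request charge. The Python sketch below compares two hypothetical configurations of the same workload. The durations are made up. The price is a parameter you would fill in from the current Lambda pricing page for your region and architecture. The request charge is left out because it is identical for both settings.

```python
def gb_seconds(memory_mb: int, billed_duration_ms: float, invocations: int) -> float:
    """Usage in GB-seconds: memory (GB) x duration (s) x number of invocations."""
    return (memory_mb / 1024) * (billed_duration_ms / 1000) * invocations


def compute_cost(memory_mb: int, billed_duration_ms: float, invocations: int,
                 price_per_gb_second: float) -> float:
    """Duration-based compute cost; the per-request charge is excluded."""
    return gb_seconds(memory_mb, billed_duration_ms, invocations) * price_per_gb_second


# Hypothetical measurements for the same workload at two memory settings.
low = gb_seconds(512, 800, 1_000_000)    # 0.5 GB * 0.8 s * 1M = 400,000 GB-s
high = gb_seconds(1024, 350, 1_000_000)  # 1.0 GB * 0.35 s * 1M = 350,000 GB-s
```

Under these assumed durations, doubling memory consumes fewer GB-seconds and therefore costs less at any per-GB-second price. If the duration had only dropped to 450 ms, the 1024 MB setting would use 450,000 GB-seconds and cost more. Only real measurements at several settings can settle the question.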

3. Proactive Warming (Pinging/Scheduled Invocations)

While AWS now provides better features like Provisioned Concurrency, “pinging” or “warming” Lambda functions with scheduled invocations was a common strategy and can still be useful for non-critical functions or for testing.

- Use EventBridge (CloudWatch Events) to invoke the function on a schedule. For example, trigger your function every 5-10 minutes with a dummy event.

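- Considerations: This strategy keeps environments warm but incurs invocation costs. A single scheduled ping keeps only one execution environment warm, so it does nothing for the additional environments Lambda creates when traffic bursts. It’s less reliable than Provisioned Concurrency for predictable loads, but can be a low-cost alternative for less critical functions or irregular traffic.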
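If you do use scheduled warming, the handler should recognize the ping and return immediately, so the warm-up invocation stays cheap and never runs business logic. The Python sketch below treats an event as a ping in two cases. The first is an event from an EventBridge scheduled rule with its default payload, which carries `"source": "aws.events"` and `"detail-type": "Scheduled Event"`. The second is an event containing a `warmer` key, a hypothetical convention you would set yourself as the rule’s constant input. `handle_business_request` is a placeholder for your real logic.

```python
def is_warmup_event(event) -> bool:
    """True for a scheduled-rule event or a custom {"warmer": true} payload."""
    if not isinstance(event, dict):
        return False
    if event.get("warmer") is True:  # hypothetical custom ping payload
        return True
    return (event.get("source") == "aws.events"
            and event.get("detail-type") == "Scheduled Event")


def handle_business_request(event):
    """Placeholder for the function's real work."""
    return {"statusCode": 200, "body": "processed"}


def handler(event, context):
    if is_warmup_event(event):
        # Return right away: reaching this point means the environment is warm.
        return {"warmed": True}
    return handle_business_request(event)
```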

4. Monitor and Analyze

Effective monitoring is crucial to identify and troubleshoot cold start issues.

CloudWatch Metrics:

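- Monitor the `Duration` metric for your Lambda functions. Keep in mind that `Duration` measures handler execution time. Lambda reports initialization time separately, as `Init Duration` in the REPORT log line of each cold start, so a `Duration` spike is only an indirect hint that a cold start occurred.

- Look at the `Invocations` and `ConcurrentExecutions` metrics to understand traffic patterns and scaling behavior.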

CloudWatch Logs Insights:

- Use CloudWatch Logs Insights to query your Lambda function logs. Every invocation ends with a REPORT line, and Lambda adds an `Init Duration` field to that line only when the invocation required a new execution environment. Filtering REPORT lines for `Init Duration` therefore shows how often cold starts happen and how long initialization took.

- Look for logs from your global initialization code to identify slow points.

AWS X-Ray:

- Integrate AWS X-Ray with your Lambda functions. X-Ray provides a detailed service map and traces that show the breakdown of latency across different segments, including the Lambda initialization phase. This is incredibly powerful for pinpointing bottlenecks.
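The REPORT-line check described above is also easy to script outside the console. The following is a minimal Python sketch that counts invocations and cold starts and averages the init time from raw log lines, for example lines exported from CloudWatch Logs. It assumes the usual `REPORT RequestId: ...` prefix and the `Init Duration: <n> ms` field format. The sample lines in the accompanying check are illustrative, not real measurements.

```python
import re
from statistics import mean

# Matches the Init Duration field, which appears only on cold-start REPORT lines.
_INIT_RE = re.compile(r"Init Duration:\s*([\d.]+)\s*ms")


def summarize_cold_starts(lines):
    """Return (invocations, cold_starts, average init ms) from raw log lines."""
    invocations = 0
    init_times = []
    for line in lines:
        if not line.startswith("REPORT RequestId:"):
            continue  # skip START, END, and application log lines
        invocations += 1
        match = _INIT_RE.search(line)
        if match:
            init_times.append(float(match.group(1)))
    avg_init = mean(init_times) if init_times else 0.0
    return invocations, len(init_times), avg_init
```

The ratio of cold starts to invocations tells you whether the other strategies in this article, such as Provisioned Concurrency, are worth their cost for a particular function.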

Best Practices to Minimize Cold Start Impact

- Small, Focused Functions: Design your Lambda functions to do one thing well. Smaller codebases mean smaller deployment packages.

- Avoid VPC if not essential: If your Lambda function doesn’t need to access resources within a VPC, avoid putting it there. VPC attachment used to be a major source of cold start latency because an ENI (Elastic Network Interface) had to be created during the cold start. Since AWS moved to shared Hyperplane ENIs in 2019, the network interface is set up when the function is created or its VPC configuration changes, so the per-cold-start penalty is now small. Keeping functions out of a VPC is still simpler when they don’t need private resources. If you must use a VPC, ensure your subnets have enough free IP addresses.

- Container Images: For very large dependencies or custom runtimes, consider using Lambda Container Images. While the initial download can be large, you have more control over the build environment and can optimize the image.

- Stay Updated: Regularly update your Lambda runtime to the latest version. AWS constantly optimizes runtimes for performance improvements.

- Consider Alternatives: For extremely latency-sensitive applications with unpredictable, spiky traffic that are heavily impacted by cold starts, evaluate alternatives like Fargate or even EC2 instances if absolute control over a warm environment is paramount, or if Provisioned Concurrency costs become prohibitive.

Conclusion: Mastering Lambda Performance

AWS Lambda cold starts are a reality of serverless computing, but they don’t have to be a crippling performance bottleneck. By understanding the underlying mechanisms and applying effective strategies for performance optimization, you can significantly reduce Lambda invocation latency and deliver a seamless experience to your users.

Prioritize optimizing your code and deployment package size, especially minimizing global initialization logic. Strategically use Provisioned Concurrency for critical workloads, and always leverage CloudWatch and X-Ray for detailed performance analysis. By following these cold start fix techniques and best practices, you’ll ensure your serverless applications remain performant, cost-effective, and robust, truly harnessing the power of AWS Lambda.